Personal Identifiable Information (PII) is any data that could potentially be used to identify a particular Person / Company / Entity. Examples include a Social Security Name, Passport Number, Bank Account, Email or Telephone etc.

Use case for PII Removal might arise for

- HIPPA Compliance

- Security Compliance

- Data Subsetting for Staging / Testing / Dev Environments

Goal

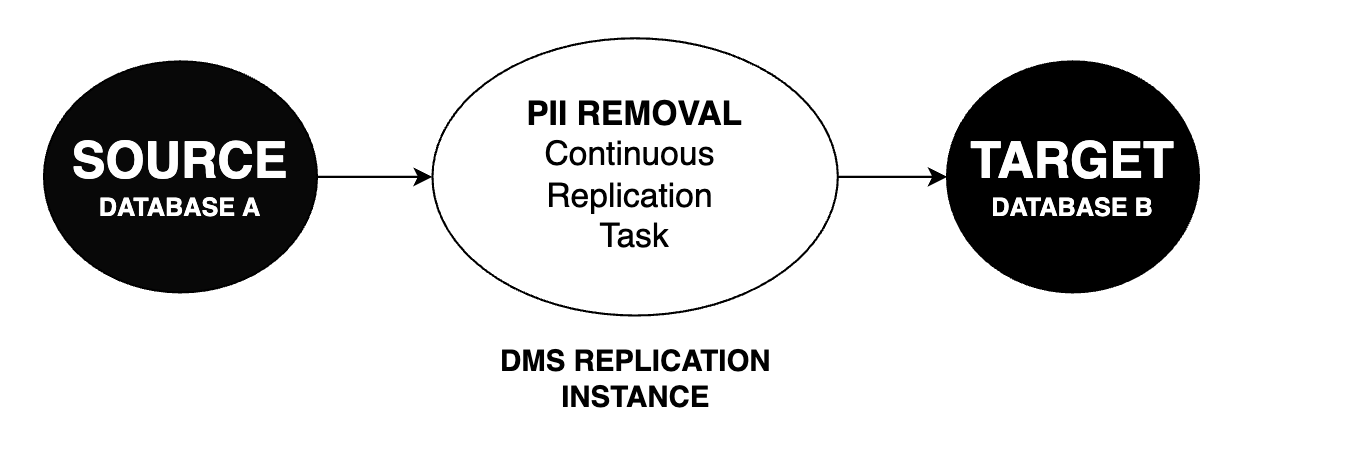

The goal of this article is to establish a replication task through which we continuously replicate data from a source to a clean PII removed database instance.

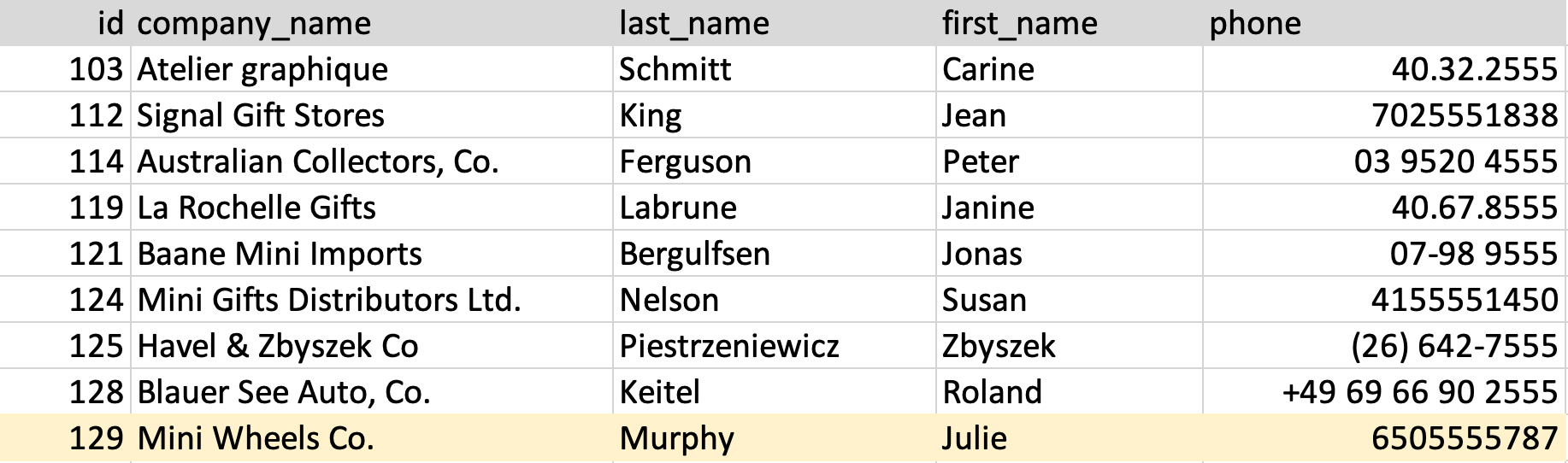

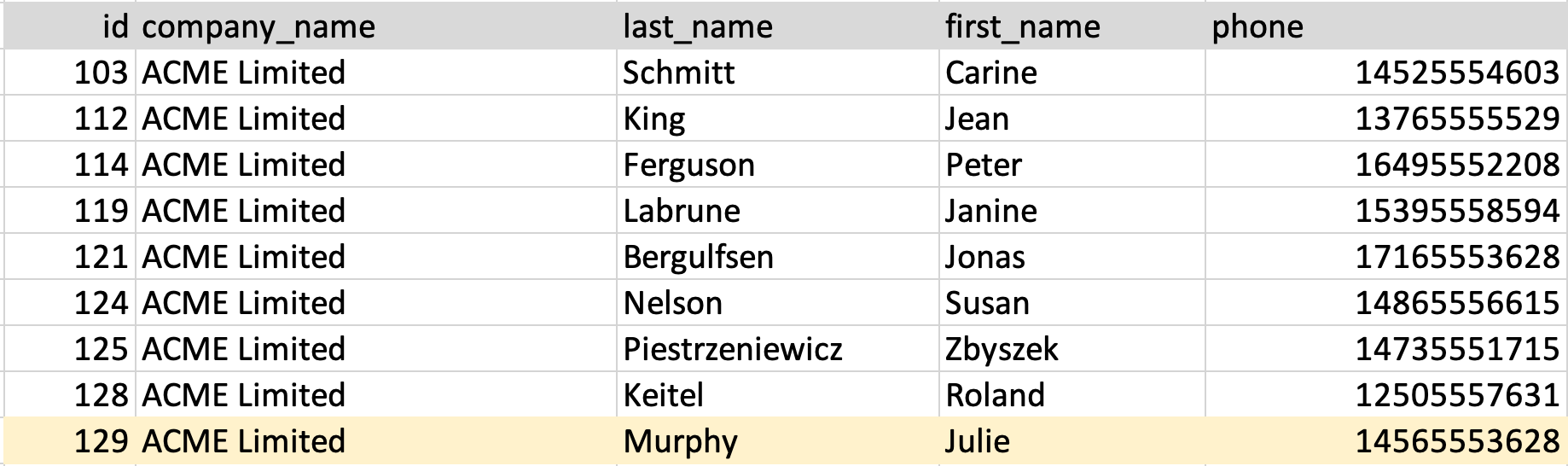

Given we have a table Companies in source Database, we want to be able to have the same table Companies in the DATABASE B with original content transformed from DATABASE A as needed

With continuous changes, we should be able to import new rows as needed.

Assumptions

This blog outlines an approach to removing PII, it is assumed that you are able to setup Databases with Logical Replication which is needed for continuous changes. For more information on DB requirements for DMS please visit AWS CHAP Source

Requirements

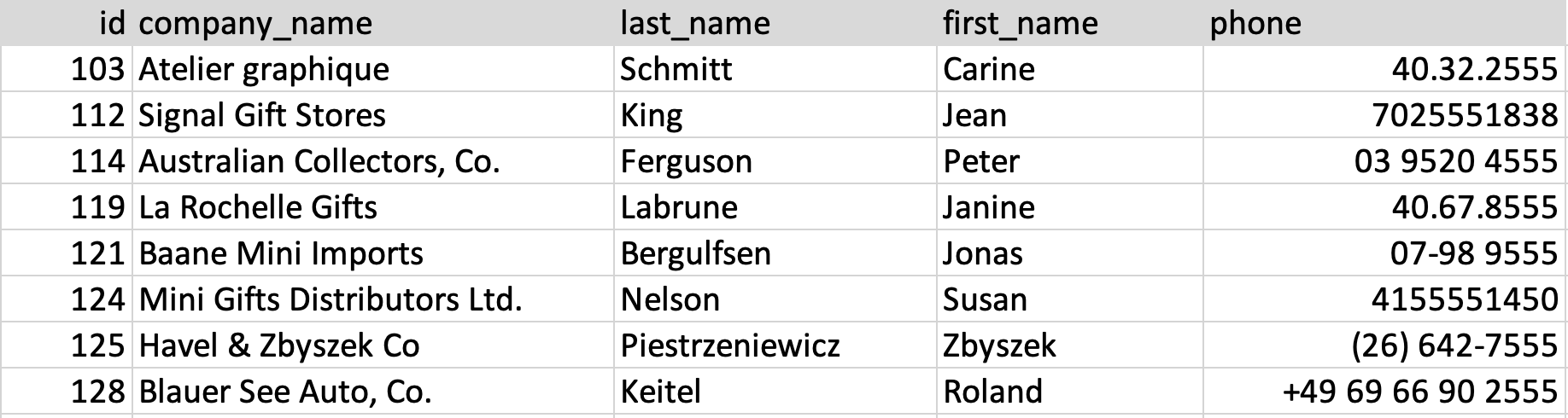

We want to remove PII for 2 columns

company_name: Replace with ACME Limitedphone: Replace with a Randomly generated phone number

As a first step we will duplicate columns company_name and phone to company_name_cleaned and phone_cleaned with transformed data

and remove the original columns.

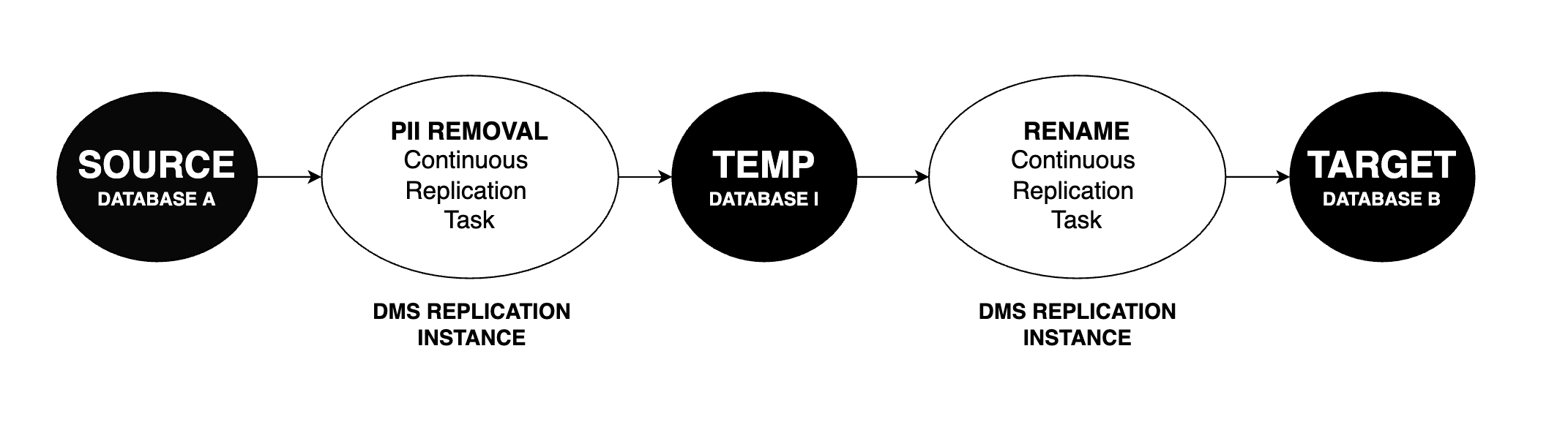

For Logical Replication to work, we cannot directly update data into columns and have to make new columns for transformed data. To overcome this restriction we will use an intermediary DATABASE I.

In a later step, we will rename the columns back to their original name.

So the PII removal setup will look something like

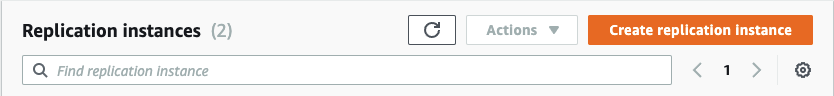

Step1. Create a Replication Instance

A replication instance is nothing but an EC2 instance, which lets you run the migration task on it. You are charged according to the EC2 instance size you choose which will vary according to your data needs. More information on selecting a replication instance can be found at AWS CHAP Replication.

Step 2. Create EndPoints

End Points are databases ( Source / Target )

Note, an endpoint cannot act as both Source and Target and has to be added separately.

In our case we will have 4 EndPoints

- Source DATABASE A

- Target DATABASE I

- Source DATABASE I

- Target DATABASE B

Step 3. Migration ( DATABASE A to DATABASE I )

Start by Creating a DMS task and adding the following Table Rules.

We are going to create a Database replication Task with have 5 rules

- Selection for tables which we want to migrate

- Remove original column

company_name - Remove original column

phone - Transform data from

company_nametocompany_name_cleaned - Transform data from

phone tophone_cleaned`

More information can be found at AWS DMS Table Mappings.

1. Selection for Tables which want to migrate

Wildcards are available within DMS. More information at AWS DMS WildCards.

{

"rules": [

{

"rule-type": "selection",

"rule-id": "1",

"rule-name": "1",

"object-locator": {

"schema-name": "%",

"table-name": "%"

},

"rule-action": "include",

"filters": []

}

]

}

2. Remove Original Columns Company Name

{

"rules": [

{

"rule-type": "transformation",

"rule-id": "2",

"rule-name": "2",

"rule-target": "column",

"object-locator": {

"schema-name": "%",

"table-name": "companies",

"column-name": "company_name"

},

"rule-action": "remove-column"

}

]

}

3. Remove Original Column Phone

{

"rules": [

{

"rule-type": "transformation",

"rule-id": "3",

"rule-name": "3",

"rule-target": "column",

"object-locator": {

"schema-name": "%",

"table-name": "companies",

"column-name": "phone"

},

"rule-action": "remove-column"

}

]

}

4. Transform data from company_name to company_name_cleaned

"rules": [

{

"rule-type": "transformation",

"rule-id": "4",

"rule-name": "4",

"rule-target": "column",

"object-locator": {

"schema-name": "%",

"table-name": "companies",

"column-name": "phone"

},

"rule-action": "add-column",

"value": "company_name_cleaned",

"expression": "'ACME Limited'",

"data-type": {

"type": "string",

"length": 8000

}

}

]

5. Transform data from phone to phone_cleaned

"rules": [

{

"rule-type": "transformation",

"rule-id": "5",

"rule-name": "5",

"rule-target": "column",

"object-locator": {

"schema-name": "%",

"table-name": "companies",

"column-name": "phone"

},

"rule-action": "add-column",

"value": "phone_cleaned",

"expression": "'+1' || (ABS(RANDOM()) % (999 - 100) + 100) || '555' || (ABS(RANDOM()) % (9999 - 1000) + 1000)",

"data-type": {

"type": "string",

"length": 8000

}

}

]

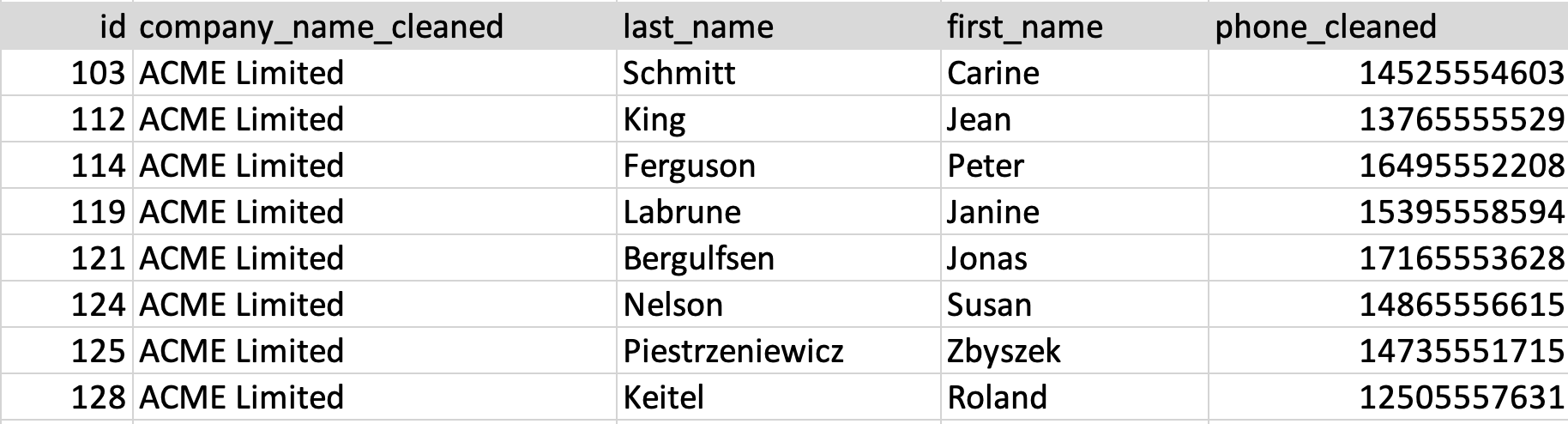

After the above task is created an run we will end up with a Companies in DATABASE I like so

Step 3. Migration ( DATABASE A to DATABASE I )

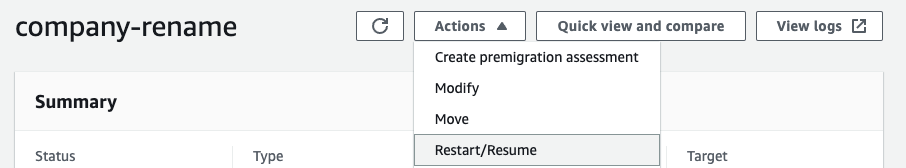

We will simply rename columns from DATABASE I to DATABASE B

We will be writing 3 Rules

- Selection

- Rename

company_cleanedto company - Rename

phone_cleanedto phone

1. Selection

We are again selecting all Tables as in the previous step.

{

"rules": [

{

"rule-type": "selection",

"rule-id": "1",

"rule-name": "1",

"object-locator": {

"schema-name": "%",

"table-name": "%"

},

"rule-action": "include",

"filters": []

}

]

}

2. Rename company_cleaned to company

{

"rules": [

{

"rule-type": "transformation",

"rule-id": "2",

"rule-name": "2",

"rule-action": "rename",

"rule-target": "column",

"object-locator": {

"schema-name": "%",

"table-name": "companies",

"column-name": "company_cleaned"

},

"value": "company"

},

]

}

3. Rename phone_cleaned to phone

{

"rules": [

{

"rule-type": "transformation",

"rule-id": "3",

"rule-name": "3",

"rule-action": "rename",

"rule-target": "column",

"object-locator": {

"schema-name": "%",

"table-name": "companies",

"column-name": "phone_cleaned"

},

"value": "phone"

},

]

}

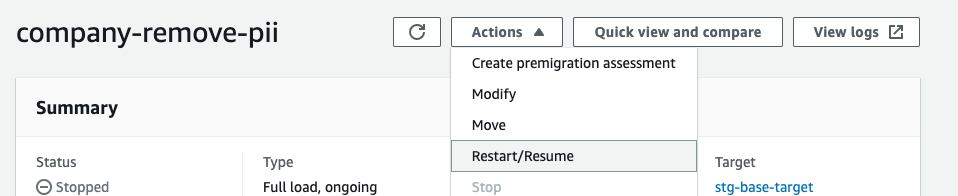

Step 4. Run the Task

In the last step we will run both the Tasks created.

Change Data Capture (CDC)

On adding new rows to the original source table, it will automatically reflect in the target database in a few seconds.

For more information please visit AWS CDC Native Support

Conclusion

In this post, we showed how we can use AWS DMS transformation with SQLite expressions to mask data during replication. We presented various scenarios associated with masking PII only or a combination of PII and non-PII datasets.

AWS DMS is feature rich and has various other options worth exploring like AWS Data Validation and API Triggers.