Subsetting a database is a method of using a prototypical sample of our data in a way that preserves the integrity of our database. If we try doing this naively, e.g., just dividing 10% of all the tables in our database, we may end up breaking the foreign key constraints and getting a statistically non-representative data sample. This is where Tenser AI’s Condenser comes into play.

Condenser is a configuration-driven database tool used for subsetting through Postgres and MySQL.

One of its greatest utilities is that it scales down a production database to a more rational size so that it can be deployed in staging, test, and development environments. This is highly cost-effective, and when used in tandem with PII removal, can enhance productivity levels to a great extent.

Another use-case is copying particular rows from one database and inserting them into another while ensuring its referential integrity.

Why do we need to subset data?

Here’s a list of situations where we need to subset our database:

-

We want to deploy our production database in staging or test environments, without PII(Personal Identifiable Information) but the database is too huge for that.

-

We might prefer a test database that contains only the particular rows and tables that we require.

-

We want to share data with others without PII.

The Challenges of Subsetting

Subsetting Data is not a piece of cake, which is why we need an open-source subsetting tool like Condenser. So, before we deep dive right into the process of data subsetting, let’s look at the challenges you’d face and how Condenser can help in subsetting database -

-

Foreign Key handling - In what ways the foreign keys are handled is fundamental to the subsetting process. Condenser is a reasonable fit for almost all kinds of foreign keys and it can subset a variety of databases. It also allows a myriad of configurations in terms of foreign key constraints. Using Condenser, we can import foreign keys via JSON if our database doesn’t have foreign key constraints.

-

Supporting open-source database - MySQL is significant to the process of data subsetting. Without MySQL, data migration cannot take place safely on our production database. The commercial subsetter - Condenser allows MySQL and Postgres and comprises far more advanced algorithms for subsetting very large databases, alongside additional subsetting options.

How to subset database using Condenser?

This blog provides a detailed overview of solving a subsetting problem on a real production database. Let’s begin -

Step 1: Install Condenser using Python 3.5+

- To begin with, download the required Python modules - toposort, psycopg2-binary, and mysql-connector-python. We can use pip for easy installation.

$ pip install toposort

$ pip install psycopg2-binary

$ pip install mysql-connector-python

-

Install Postgres and/or MySQL for managing database. We have to use

pg_dumpandpsqltools for Postgres - integrate it to our$PATHor point to them with$POSTGRES_PATH. In case, we are usingMySQL, we needmysqldumpandmysql- we can either integrate it to our$PATHor point to them with$MYSQL_PATH. -

Now, let’s download this repo. It can be either cloned or downloaded as a zip.

-

Next, we have to set up our configuration and save it in

config.json. Look at the example below-

"first_target": [

{

"table": "public.target_table",

"percent": 1

}

]

This will collect 1% of the tables’ data named public.target_table.

In order to set up the configuration,

we have to provide the source and destination database connection details,

and subsetting goals in initial_targets in our config.json file.

- Now, we have to run to complete the installation using

$ python direct_subset.py.

Step 2: Build your Configuration File

We can start by building our configuration file. For example, if we want our end result to be subsetting 1% of our original table to the users’ table, then our Condenser code should be -

{"desired_result": {"table": "user", "percent": 1}}

Our database may have several other tables that are needed by the back-end system and cannot be subsetted such as -

{"passthrough_tables": ["region", "api_settings"]}

Not to mention,

many database systems have thousands of tables,

and whether it’s direct or iterative subsets,

it can be difficult to list all the passthrough tables and sort them.

Therefore,

we have to deploy the notion of a passthrough_threshold,

an integer field that demonstrates the environment to treat any table with fewer rows than the threshold as a passthrough table.

Something like this -

{"desired_result": {"table": user", "percent": 1}},

{"passthrough_tables": ["region","api_settings"]},

{"passthrough_threshold": 100}

It will enable the system to consider any table under 100 rows as a passthrough table.

Step 3: Add connection info for source and destination databases

We should also specify the connection information for both the source and destination databases,

before running the condenser.

This can be done through the .source_db_connection_info and .destination_db_connection_info files.

Look at the code below to see how the destination has been specified.

The source should have the same fields as this one -

"db_type": "postgres",

"source_db_connection_info": {

"user_name": "user",

"host": "host.host.com",

"db_name": "source_db",

"port": 5432

Note:

There is also an optional “password” field. We can mention it in the file, otherwise, we have to input it everytime we run Condenser.

Step 4: Remove PII from your database

Personal Identifiable Information (PII) is any data that could be used to identify a particular Person / Company / Entity. Some examples are Social Security Name, Passport Number, Bank Account, Email or Telephone etc.

These are highly-confidential data that may be required to be masked and converted into some dummy digits/codes for security compliance.

Let’s look at the flow below for a better understanding -

Source (Initial Database) →→→ PII Removal using Condenser →→→ Target(Final Database)

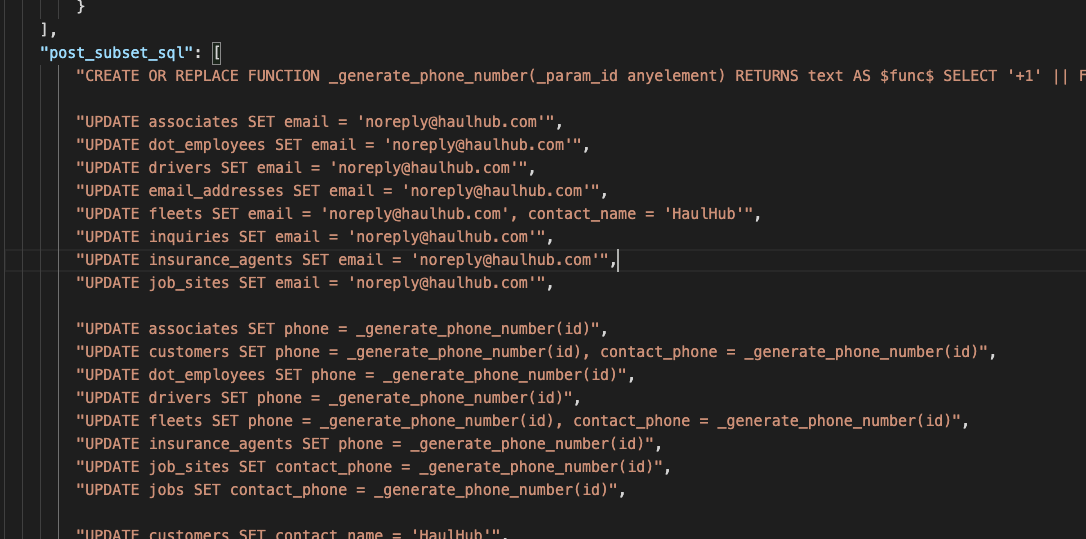

We can do this by using Condenser via post hook in config.json

while subsetting the database.

By deploying post_subset_sql,

we can set queries to replace the functions(PII) with some random numbers.

Here is an example given below -

Step 5: Run the Result

Now that we have removed the PII and subsetted the database, it’s time to run the program. The end result will be a new database containing only the subsetted data of 1% from the original data without PII.

Final Thoughts

In this post,

we talked about how we can use Condenser with Postgres and MySQL to subset database.

We also explained the process of masking data like Personal Identifiable Information(PII) using post hook within Condenser.

We hope this blog was useful and you learnt a few things about database subsetting.

To know more about PII removal,

read our blog on

AWS DMS.