What is Scalability Testing?

Performance testing is a type of Non-Functional testing that measures the responsiveness and stability of a system under a particular workload.

Both Load and Scalability tests fall under the umbrella of Performance testing, but there is a fundamental difference in their purpose.

Load testing is a process to determine the maximum load after which the application crashes and users are not able to access the system, whereas Scalability testing measures the minimum and maximum load at all levels: Software, Hardware and Database. Here, the goal is to determine when the system is not able to handle any more users.

Using the results, the developers perform reverse engineering and analyze the reasons why the application doesn’t scale. They might need to implement new methods, remodel the architecture, scale up the hardware etc. Running scalability tests is an iterative process with a gradual increase in load till we have a system that supports a maximum expected number of users with a quality User Experience.

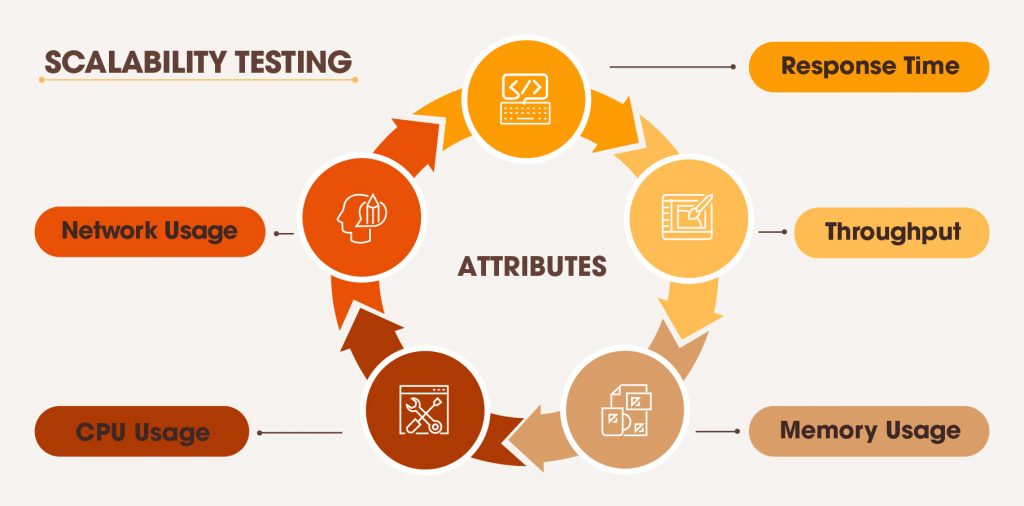

The tests should be done right from the initial functional release to identify the key performance problems and confront the design decisions early in the Development Cycle. The below image shows the main attributes to consider in our Scale tests.

Creating User Profile

‘Creating user profile’ is the key step in setting our goals for scale tests. Knowing how the users will access the application in real life helps us simulate the actual user behavior and predict the right load on the system. There are various factors to consider while defining the user profile. For instance, what does our application do? What time of the day or year is it used the most? What kind of users will we have? Where are the servers located? And many more.

For ease of understanding, we created a simple profile for our application as described below:

-

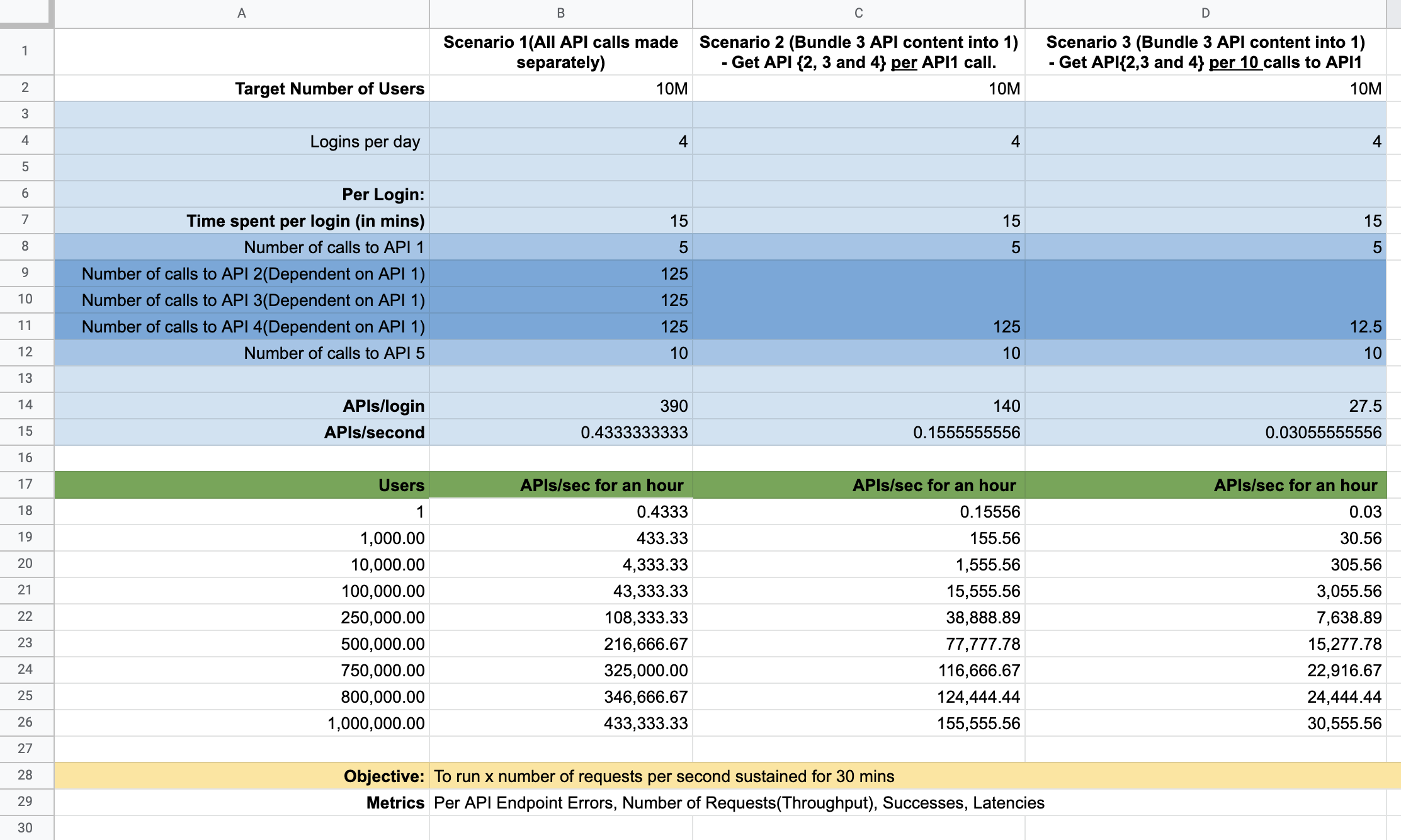

Let’s say our target number of users is 10M!

-

A user Logs in 4 times per day for 15 mins each. (That makes an hour of usage)

-

In each session, we call 5 APIs. (Calls per API is defined in the sheet below)

Here, we have defined 3 Scenarios on the API level:

- First one is where all API calls {1, 2, 3, 4 & 5} are made separately.
- Second (with bundling), where API {2, 3 and 4} are bundled in 1 request ‘per call’ to API 1.
- Third (with batching), where API {2, 3 and 4} are bundled in 1 request ‘per 10 calls’ to API 1.

Here, we have calculated the ‘API calls/login’, ‘API calls/sec’ & ‘API calls/hour’ and extrapolated the results to 10M users. For each scenario, the number of API calls is reduced significantly but we need to assess all 3 cases to test how our current system performs.
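The extrapolation above can be sketched in a few lines of JavaScript. The per-login call counts below are hypothetical placeholders (the real values come from the sheet), and the interpretation of bundling and batching as "one combined request for APIs 2 to 4 per call, or per 10 calls, to API 1" is an assumption. The per-hour and per-second figures are plain daily averages, which assumes traffic is spread evenly over 24 hours; a real profile would usually add a peak-hour factor.

```javascript
// Hypothetical calls made to each API during one login (placeholder values).
const callsPerLogin = { api1: 10, api2: 10, api3: 10, api4: 10, api5: 1 };

// User profile taken from the article: 10M users, 4 logins per day.
const profile = { users: 10000000, loginsPerDay: 4 };

// Number of HTTP requests a single login produces under each scenario.
function requestsPerLogin(calls, scenario) {
  const { api1, api2, api3, api4, api5 } = calls;
  switch (scenario) {
    case "separate": // every API call is its own request
      return api1 + api2 + api3 + api4 + api5;
    case "bundled": // one combined request for APIs 2-4 per call to API 1
      return api1 + api1 + api5;
    case "batched": // one combined request for APIs 2-4 per 10 calls to API 1
      return api1 + Math.ceil(api1 / 10) + api5;
    default:
      throw new Error(`unknown scenario: ${scenario}`);
  }
}

// Extrapolate one scenario to the whole user base (daily averages only).
function extrapolate(calls, scenario, { users, loginsPerDay }) {
  const perLogin = requestsPerLogin(calls, scenario);
  const perDay = perLogin * loginsPerDay * users;
  return {
    scenario,
    perLogin,
    perDay,
    perHour: perDay / 24,
    perSecond: perDay / 86400,
  };
}

const results = ["separate", "bundled", "batched"].map((scenario) =>
  extrapolate(callsPerLogin, scenario, profile)
);

console.table(results);
```

Swapping in the real per-API counts gives the request rates that each scenario's test needs to reach.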

Setting Baseline

Along with User Profiling, there are some other important questions that we need to define before starting the scaling process:

- Is our system flexible enough to accommodate the defined number of users?
- Do we have enough data on our system to give accurate performance metrics?
- For how long can the current architecture accommodate the load with minimum latency? If it cannot, what changes might be required to achieve our goal? The changes required can be at the API level, DB level, or Infrastructure level.
- Which tools are we going to use to run and analyze our scale tests? Factors to consider here are the ability to run tests concurrently for multiple users, the ability to configure different server locations, and reliable performance insights (Response Time, Throughput, CPU Usage, Memory Usage, Network Usage).

With these well-defined goals, we are ready to run our tests. While there are many tools available today, we have chosen k6 to run the load tests for our application and New Relic to check Backend metrics.

About k6

k6 is an open-source load testing tool that supports scripting in JavaScript ES2015/ES6. It also has a paid cloud version which comes with a bunch of additional features, including:

- Scale tests from multiple locations.
- Test Builder and Script Editor.
- Graph representation for performance insights.

Read the Guide to install k6 for a specific operating system.

For testing our application, we are using k6 Cloud, as we could only run up to 50 VUs (virtual users) without it. We can read more about k6 test scripts here.

Test Configuration

k6 is very easy to configure. We can set the options according to the scenario we are running.

Here is an example of the options set in our script.js file:

```javascript
export const options = {
  // Ramping profile: 30 minutes in total, peaking at 800 virtual users.
  // The `vus` and `duration` shortcuts are left out here because recent
  // k6 versions reject `duration` when it is combined with `stages`.
  stages: [
    { duration: '5m', target: 200 }, // ramp up to 200 VUs
    { duration: '15m', target: 800 }, // ramp up to the 800 VU peak
    { duration: '10m', target: 400 }, // ramp down to 400 VUs
  ],
  thresholds: {
    http_req_failed: ['rate<0.01'], // http errors should be less than 1%
    http_req_duration: ['p(95)<200'], // 95% of requests should be below 200ms
  },
  ext: {
    loadimpact: {
      projectID: 3562625, // required to run script on the cloud
      // Test runs with the same name are grouped together
      name: 'Scale Tests',
    },
  },
};
```

To run the script from the Terminal, we need to log in to the cloud using our username and password and then run the command: k6 cloud script.js. More details are mentioned here.

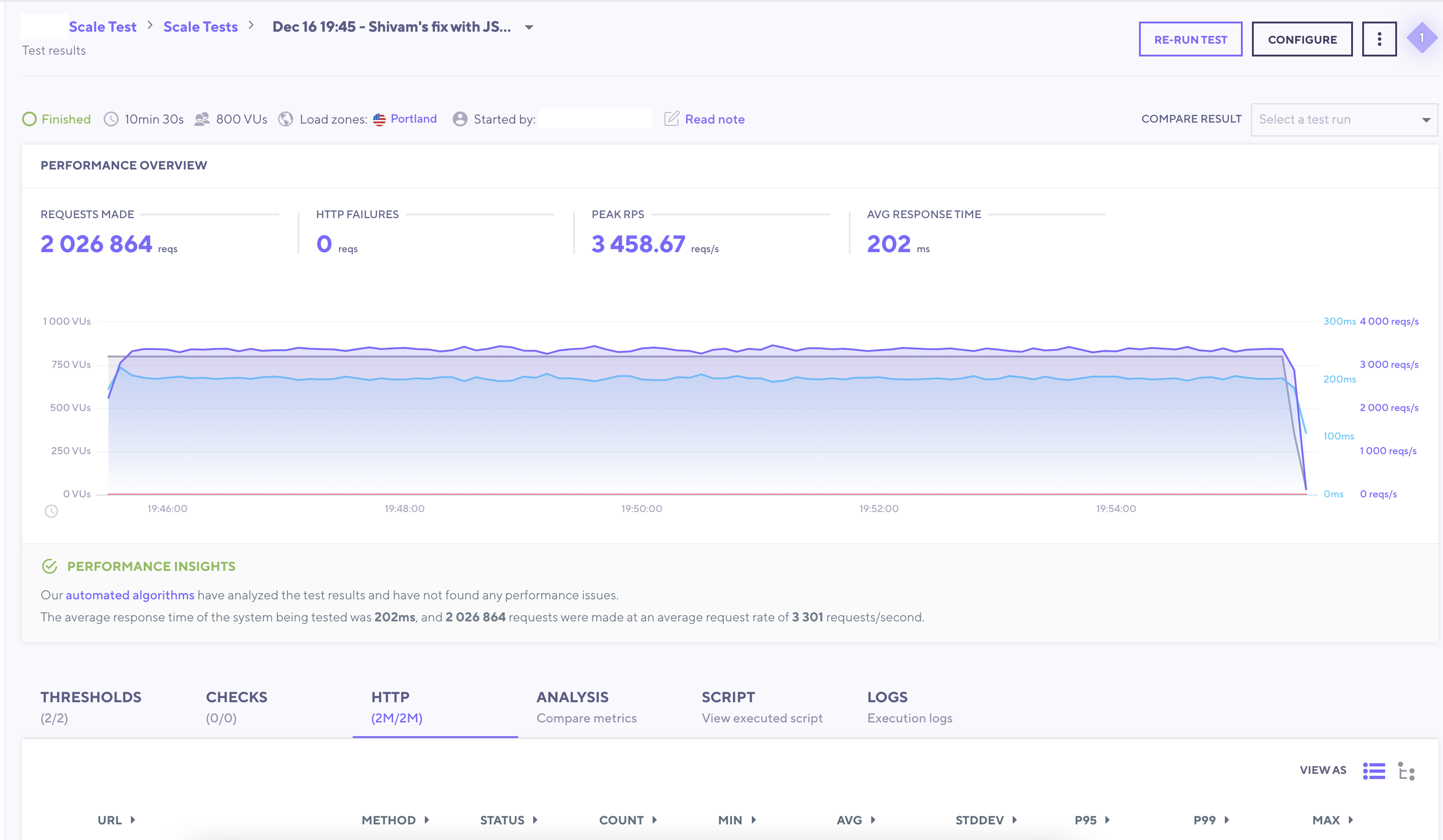

k6 Dashboard

The cloud dashboard gives us a clear insight into performance metrics such as peak RPS, average response time, failures, total number of requests made, and duration. It also gives us other important information such as the API URL, method used, status, P95 and P99.
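To make the P95 and P99 figures concrete, here is a minimal sketch of how a percentile can be computed from raw response times. It uses the simple nearest-rank method, so values reported by a tool may differ slightly if the tool interpolates between samples. The sample durations are made up for illustration.

```javascript
// Nearest-rank percentile: the smallest sample such that at least p% of
// all samples are less than or equal to it.
function percentile(samples, p) {
  if (samples.length === 0) throw new Error("no samples");
  const sorted = [...samples].sort((a, b) => a - b);
  const rank = Math.ceil((p / 100) * sorted.length);
  return sorted[Math.max(rank, 1) - 1];
}

// Plain arithmetic mean of the samples.
function average(samples) {
  return samples.reduce((sum, value) => sum + value, 0) / samples.length;
}

// Hypothetical response times in milliseconds, with one slow outlier.
const durationsMs = [120, 95, 180, 210, 130, 99, 150, 175, 640, 110];

const summary = {
  avg: average(durationsMs),
  p50: percentile(durationsMs, 50),
  p95: percentile(durationsMs, 95),
  p99: percentile(durationsMs, 99),
};

console.log(summary);
```

With only ten samples the single slow request dominates the high percentiles, which is why percentiles are usually read alongside the average rather than instead of it.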

Analysing Test Results and Next Steps

Now that we have a fair idea about k6 and scalability testing, we are ready to create the scripts (one script per scenario) and configure the tests (set the number of VUs, duration, etc.) accordingly.

First, we can start with 1 VU and see how the system performs, then gradually increase the number of users and requests per user (using either duration or iterations).

Capture the Average Response Time, Failures and Latencies using k6.

Also, we need to observe the scalability attributes from New Relic: Response Time, Throughput, CPU Usage, Memory Usage and Network Usage.

Note that this is just the starting point. From here we need to experiment and adjust the system to reach our performance goals.
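The gradual ramp-up described above can be generated rather than typed by hand. The helper below is a hypothetical sketch (the name buildRampStages and its parameters are not part of k6): it returns an array in the shape k6 expects for `options.stages`, stepping from a starting VU count to a maximum, holding at each level so metrics can settle, and ramping down at the end.

```javascript
// Build a stepped load profile as an array of { duration, target } stages.
// Each step ramps to the next VU level, then holds that level for the same
// duration (a stage whose target equals the previous target is a hold).
function buildRampStages({ startVUs = 1, maxVUs, steps, stepDuration = "5m" }) {
  if (steps < 1 || maxVUs < startVUs) {
    throw new Error("invalid ramp settings");
  }
  const stages = [];
  for (let i = 1; i <= steps; i++) {
    const target = Math.round(startVUs + ((maxVUs - startVUs) * i) / steps);
    stages.push({ duration: stepDuration, target }); // ramp to this level
    stages.push({ duration: stepDuration, target }); // hold at this level
  }
  stages.push({ duration: stepDuration, target: 0 }); // ramp down to zero
  return stages;
}

// Example: four steps up to 800 VUs, five minutes per ramp and per hold.
const stages = buildRampStages({ maxVUs: 800, steps: 4 });

console.log(stages);
```

In a k6 script the result would be assigned to the `stages` key of the exported options object; the step count and durations are values to adjust between iterations of the test.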

Final Thoughts

Scalability is crucial for any app’s success. The fact that we can reach the expected results in our testing gives us the confidence to accommodate growth, provide a seamless User Experience and eliminate the risk of fire-fighting situations in the future.