One of the most exciting features of Ruby 3 is its improved support for fibers. Introduced in Ruby 1.9 as a coroutine abstraction, fibers never really became mainstream. Ruby 3 changes the game by introducing the fiber scheduler interface (documented as Fiber::SchedulerInterface in Ruby 3.0, where it describes an interface rather than a concrete class), which allows for quicker and more efficient context switching than its Thread counterpart.

Fibers are lightweight workers that look like threads but have major advantages: they are more memory efficient, and they let programmers control when a code segment pauses and resumes. At the application level, fibers allow non-blocking I/O waits: when a fiber is waiting on an I/O service, it can give up control to another fiber and resume processing once the I/O operation has completed. This context switching is managed by the fiber scheduler. The Falcon Rack web server, for example, uses Async fibers internally. Because a fiber does not need to block on I/O, Falcon can serve more requests concurrently.

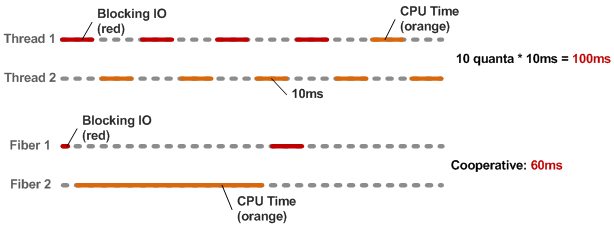

More “traditional” systems handle blocking I/O calls by allocating a separate operating system (OS) thread for every request. This allows them to make blocking system calls for reading from and writing to sockets and files, or any other blocking I/O calls. However, OS threads are relatively expensive to create, and they require a context switch in the OS whenever another thread needs to run. This leads to a lot of overhead.

Fibers avoid much of this overhead because they are designed to be very lightweight. Since they consume very little memory, many fibers can live inside a single thread, but at most one fiber per thread can run at a time. There is always a currently active fiber: even when code does no fiber-related work, it still runs in the context of the main fiber for the current thread, which the Ruby runtime creates automatically for each thread. Fibers are therefore not meant to speed up computationally intensive tasks, since splitting CPU-bound work into many small parts does not make it finish any sooner. However, many Ruby processes spend much of their time waiting on I/O, such as waiting for the responses of API and database calls or waiting to read more of an HTTP request from a network socket. This is the use case where fibers shine!
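As a rough illustration of the cooperative model described above, the sketch below runs three fibers on one thread. The job names, the `log` array, and the round-robin loop are hypothetical stand-ins: real non-blocking I/O in Ruby 3 would go through a fiber scheduler rather than a hand-written loop like this one.

```ruby
# Records the order in which the fibers actually run.
log = []

# Three hypothetical jobs. Each one does a step of "work", then yields at the
# point where a real program would be waiting on I/O.
fibers = %w[a b c].map do |name|
  Fiber.new do
    2.times do |step|
      log << "#{name}:#{step}"
      Fiber.yield # pretend we are waiting on a socket or a database here
    end
  end
end

# A naive round-robin loop standing in for a scheduler: resume every fiber
# once per pass, then drop the ones that have finished.
until fibers.empty?
  fibers.each(&:resume)
  fibers.select!(&:alive?)
end

puts log.inspect
```

Each fiber runs only until its next `Fiber.yield`, so the log interleaves the three jobs even though a single fiber is running at any moment.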

Now let’s look at Ruby fibers at a basic level. A fiber is simply an independent execution context that can be paused and resumed programmatically.

> f = Fiber.new { puts "Ruby Fibers are awesome!" }

=> #<Fiber:0x00007fa5860e2fa8@(irb):1 (created)>

The fiber has only been created at this point. It has not been executed yet.

> f.resume

Ruby Fibers are awesome!

=> nil

Not so different from threads yet! The awesome thing about fibers is that they can be paused and resumed.

> f = Fiber.new { puts "Ruby Fibers are awesome!"; Fiber.yield; puts "Rails should adopt them ASAP!" }

=> #<Fiber:0x00007fa5860e2fa8@(irb):1 (created)>

> f.resume

Ruby Fibers are awesome!

=> nil

> f.resume

Rails should adopt them ASAP!

=> nil

Fiber.yield is not the traditional Ruby yield keyword, which passes control from a method to the block it was called with. Rather, Fiber.yield suspends the current fiber and hands control back to whoever called resume; any value passed to Fiber.yield becomes the return value of that resume call. A fiber scheduler builds on this same suspend-and-resume mechanism to decide which fiber can run next. This is how fibers achieve quick context switching.
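To make the hand-off concrete, here is a small sketch of values travelling in both directions between resume and Fiber.yield. The fiber name `echo` and the symbols are made up for illustration.

```ruby
# Arguments to the first resume become the block parameters. Arguments to
# later resume calls become the return value of the pending Fiber.yield.
echo = Fiber.new do |first|
  received = [first]
  received << Fiber.yield(:paused_once)   # suspend, handing a value to the caller
  received << Fiber.yield(:paused_twice)  # suspend again
  received                                # the block's result goes to the last resume
end

r1 = echo.resume(1) # runs until the first Fiber.yield
r2 = echo.resume(2) # continues until the second Fiber.yield
r3 = echo.resume(3) # runs to the end of the block

p r1, r2, r3
```

Here `r1` and `r2` are the symbols handed to Fiber.yield, and `r3` is the array of everything the fiber received through resume.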

Fibers also keep their own state, such as local variables and their position in the code, across all the pausing and resuming.

    factorial = Fiber.new do
      count = 1
      loop do
        Fiber.yield((1..count).inject(:*))
        count += 1
      end
    end

The loop defines the part of the fiber that runs on every subsequent resume call. Everything before the loop runs only once, on the first resume.

Let’s see how it performs!

> factorial.resume

=> 1

> factorial.resume

=> 2

> factorial.resume

=> 6

> factorial.resume

=> 24

Fantastic! But how is that different from threads?

Fibers are much more lightweight and memory-efficient than threads. A major reason for this is that the operating system runs threads and decides when to run and pause them to achieve concurrency. With fibers, however, the programmer specifies when to start and when to stop. While threads run in the background doing their task, a fiber becomes the main program until someone stops it.

MRI (CRuby) uses a fair scheduler, which means that each thread is given an equal time slice to run (100 ms by default in current CRuby) before it is suspended and the next thread is put in control. If a thread spends part of its slice waiting on a blocking call, that computing time is essentially wasted. By contrast, fibers force the programmer to do explicit scheduling, which can certainly add to the complexity of the program, but it offers full flexibility in determining how CPU resources are used and also helps avoid the need for locks and mutexes in our code. Fibers are also cheaper to create than threads and switch context faster.
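One way to check the creation-cost claim on your own machine is a quick timing sketch like the one below. The workload size `count` is an arbitrary choice, and the numbers will vary by Ruby version, OS, and hardware, so treat this as a rough comparison rather than a rigorous benchmark.

```ruby
require "benchmark"

count = 1_000 # arbitrary number of workers to create

# Create, run, and wait for `count` threads that do almost nothing.
thread_time = Benchmark.realtime do
  Array.new(count) { Thread.new { :ok } }.each(&:join)
end

# Create and run `count` fibers doing the same trivial work.
fiber_time = Benchmark.realtime do
  Array.new(count) { Fiber.new { :ok } }.each(&:resume)
end

puts format("threads: %.4fs, fibers: %.4fs", thread_time, fiber_time)
```

Because the workers do no real work, the measurement is dominated by creation and switching overhead, which is the cost this section is about.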

Further Reading

While fibers provide a delightful interface for working with concurrency, the real drawback of Ruby and other GIL-based (global interpreter lock) languages is that only one native thread per process can execute Ruby code at a time. However, the advent of Ractors, which work around Ruby’s global VM lock (GVL), offers better (actual) parallelism.

Ractor is an actor-model-like concurrency abstraction designed to provide parallel execution without thread-safety concerns. Threads in different ractors can compute at the same time. Each ractor has at least one thread, and each thread may contain multiple fibers. Inside a ractor, only a single thread is allowed to execute at a given time.
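A minimal sketch of two ractors computing in parallel might look like this. It assumes Ruby 3.0 or later; the inputs 5 and 10 are arbitrary, and the `respond_to?` check is only there because the method for fetching a ractor's result changed name between Ruby versions.

```ruby
# Ractor is experimental in Ruby 3.x and prints a warning on first use.
workers = [5, 10].map do |n|
  # The input is passed in explicitly: a ractor block cannot read local
  # variables from the surrounding scope.
  Ractor.new(n) { |limit| (1..limit).inject(:*) }
end

# Ractor#take (Ruby 3.0 to 3.4) was replaced by Ractor#value in later
# versions, so use whichever one this Ruby provides.
results = workers.map { |r| r.respond_to?(:value) ? r.value : r.take }

p results
```

Each ractor computes a factorial independently, and the main ractor only collects the two results at the end.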

It will be interesting to see how Ruby evolves!