We often come across situations where an app deployment to production fails due to a breaking change. At this point, we have two options: revert or fix it. Generally, we go for the revert. To revert, we have to revert the code, wait for CI to complete, and create the deployment again. This entire process usually takes a while, disrupting the app and potentially causing significant monetary loss.

To handle the risks associated with deployments, we need a definite strategy. For example:

- We need to make sure the new version is available to users as early as possible.

- In case of failure, we should be able to roll back the application to the previous version almost immediately.

There are two main strategies for deploying apps to production with zero downtime:

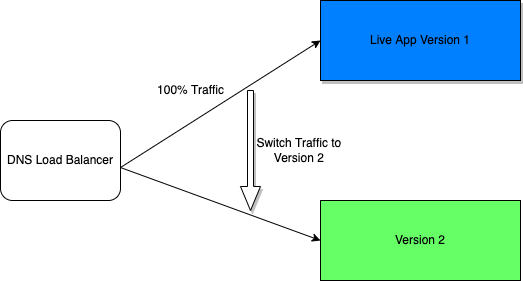

- Blue/Green Deployment: This reduces downtime and risk by running two identical production environments called Blue and Green. Instead of updating the current production (blue) environment with the new application version, a new production (green) environment is created. When it's time to release the new application version, traffic is routed from the blue environment to the green environment. If there are any problems, the deployment can easily be rolled back by routing traffic back to blue.

- Rolling Updates: This is a basic strategy in which the new code is added to the existing deployment. The deployment temporarily becomes a heterogeneous pool of old and new instances, with the end goal of gradually replacing all old instances with new ones.
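In Kubernetes, one common way to implement the blue/green switch is a Service whose selector points at one of two otherwise identical Deployments. The sketch below assumes hypothetical `version: blue` and `version: green` Pod labels and a Service port of 80. Neither comes from the demo-app setup used in this article.

```yaml
# Hypothetical Service for a blue/green setup. It assumes two Deployments
# whose Pods are labelled version: blue and version: green respectively.
apiVersion: v1
kind: Service
metadata:
  name: demo-app
spec:
  selector:
    app: demo-app
    version: blue        # change to "green" to cut traffic over, back to "blue" to roll back
  ports:
    - port: 80           # port the Service listens on (assumed)
      targetPort: 3001   # containerPort of the demo-app container
```

Changing the selector value to `green` moves all traffic to the new environment at once. Changing it back to `blue` is the rollback.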

Kubernetes accommodates both of the strategies mentioned above. We will look at rolling updates, because they allow a safe, gradual rollout while keeping the ability to revert if necessary. Rolling updates also have first-class support in Kubernetes, which lets us phase in a new version gradually.

Rolling Updates

In Kubernetes, rolling updates are the default strategy for updating the running version of an app deployed as a Deployment. Kubernetes runs a cluster of nodes, and each node runs Pods. A rolling update incrementally cycles the old Pods out and brings the new Pods in.

This is how rolling updates work.

This is our Kubernetes Deployment file. It specifies 3 replicas for demo-app, and the container image points to AWS ECR:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: demo-app
  labels:
    app: demo-app
spec:
  replicas: 3
  selector:
    matchLabels:
      app: demo-app
  template:
    metadata:
      labels:
        app: demo-app
    spec:
      containers:
        - name: image
          image: 73570586743739.dkr.ecr.us-west-2.amazonaws.com/demo-app:v1.0
          imagePullPolicy: Always
          ports:
            - containerPort: 3001
```

Now, when we run:

```shell
kubectl create -f demo-app.yml
```

This creates the Deployment with 3 Pods, and we can see that their status is Running:

```shell
kubectl get pods
```

```text
NAME                        READY   STATUS    RESTARTS   AGE
demo-app-1564180363-khku8   1/1     Running   0          14s
demo-app-1564180363-nacti   1/1     Running   0          14s
demo-app-1564180363-z9gth   1/1     Running   0          14s
```

Now, to push a new version out (assuming CI has already pushed the new image to ECR), we only need to update the image URL:

```shell
kubectl set image deployment.apps/demo-app image=73570586743739.dkr.ecr.us-west-2.amazonaws.com/demo-app:v2.0
```

The output is similar to:

```text
deployment.apps/demo-app image updated
```

We can also add a description to the update, so we know what has changed:

```shell
kubectl annotate deployment.apps/demo-app kubernetes.io/change-cause="demo version changed from 1.0 to 2.0"
```
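The same update can also be made declaratively: edit demo-app.yml and re-apply it with `kubectl apply -f demo-app.yml`. The fragment below is a sketch that shows only the fields that change. The rest of the file is assumed to stay as shown earlier. Any change to the Pod template, such as the new image tag, triggers a new rollout. The `kubernetes.io/change-cause` annotation is the value shown in the CHANGE-CAUSE column of the rollout history.

```yaml
# Fragment of demo-app.yml: only the changed fields are shown; everything
# else in the file stays as in the original manifest.
metadata:
  name: demo-app
  annotations:
    # Recorded as the CHANGE-CAUSE of the new revision
    kubernetes.io/change-cause: "demo version changed from 1.0 to 2.0"
spec:
  template:
    spec:
      containers:
        - name: image
          # Changing the Pod template (here, the image tag) starts a rollout
          image: 73570586743739.dkr.ecr.us-west-2.amazonaws.com/demo-app:v2.0
```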

We can always check the status of the rolling update.

```shell
kubectl rollout status deployment.apps/demo-app
```

The output is similar to:

```text
Waiting for rollout to finish: 2 out of 3 new replicas have been updated...
deployment "demo-app" successfully rolled out
```

Here, 2 of the 3 new Pods have been created, and the corresponding old Pods are being decommissioned. Once all the Pods are replaced, the command prints a success message.

Finally, running kubectl get pods should show only the new Pods:

```shell
kubectl get pods
```

```text
NAME                        READY   STATUS    RESTARTS   AGE
demo-app-1564180365-khku8   1/1     Running   0          14s
demo-app-1564180365-nacti   1/1     Running   0          14s
demo-app-1564180365-z9gth   1/1     Running   0          14s
```

A rolling update gradually deploys the new version of our application across the cluster, replacing the Pods in several phases. For example, we may replace 25% of the Pods in the first phase, another 25% in the next, and so on, until all are upgraded. Because the Pods are not replaced all at once, both versions are live, at least briefly, during the rollout.
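The 25% figure matches the Kubernetes defaults for the rolling-update parameters `maxSurge` and `maxUnavailable`. The fragment below is a sketch that only makes those defaults explicit in demo-app.yml. Leaving the `strategy` block out should behave the same way.

```yaml
# Fragment of demo-app.yml: the default rolling-update settings made explicit.
spec:
  replicas: 3
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 25%        # extra Pods allowed above replicas (percentage rounded up)
      maxUnavailable: 25%  # Pods allowed to be unavailable (percentage rounded down)
```

With 3 replicas, `maxSurge` rounds 0.75 up to 1 and `maxUnavailable` rounds 0.75 down to 0. So at most 4 Pods exist at once and at least 3 stay available. Kubernetes starts one new Pod, waits for it to become ready, removes one old Pod, and repeats. For a Deployment this small, each phase therefore replaces one Pod (about a third) rather than exactly 25%.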

This is how we achieve zero-downtime deployments with Kubernetes. We can deploy as often as we want, and our users will not notice the difference, as long as new Pods only receive traffic once they are ready.
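Zero downtime depends on new Pods receiving traffic only when they can actually serve it. Without a readiness probe, Kubernetes treats a Pod as ready as soon as its container starts. Below is a minimal sketch of a probe for the demo-app container. It assumes the app exposes a hypothetical `/healthz` endpoint on port 3001. The original manifest defines no probe.

```yaml
# Fragment of the container spec in demo-app.yml with a readiness probe added.
# /healthz is a hypothetical endpoint; point it at one the app really serves.
containers:
  - name: image
    image: 73570586743739.dkr.ecr.us-west-2.amazonaws.com/demo-app:v1.0
    imagePullPolicy: Always
    ports:
      - containerPort: 3001
    readinessProbe:
      httpGet:
        path: /healthz          # hypothetical health endpoint
        port: 3001
      initialDelaySeconds: 5    # wait 5s after the container starts before the first check
      periodSeconds: 10         # then check every 10s
```

The rollout only counts a new Pod as available once this probe succeeds. A Pod whose probe keeps failing shows 0/1 in the READY column.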

However, even with rolling updates, there is still a risk that our application will not work as expected at the end of the deployment. In that case, we need to roll back.

Rolling back a deployment

Sometimes, due to a breaking change, we may want to roll back a Deployment. By default, Kubernetes maintains a Deployment's rollout history, so we can roll back whenever we need to.
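The amount of history kept is controlled by the Deployment's `revisionHistoryLimit` field, which defaults to 10 old ReplicaSets. Here is a sketch of setting it explicitly in demo-app.yml:

```yaml
# Fragment of demo-app.yml: number of old ReplicaSets (revisions) kept
# for rollbacks. 10 is the Kubernetes default.
spec:
  revisionHistoryLimit: 10
```

Setting it to 0 cleans up all old ReplicaSets. The Deployment then can no longer be rolled back.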

Suppose we push a breaking change to production and try to deploy it:

```shell
kubectl set image deployment.apps/demo-app image=73570586743739.dkr.ecr.us-west-2.amazonaws.com/demo-app:v3.0
kubectl annotate deployment.apps/demo-app kubernetes.io/change-cause="demo version changed from 2.0 to 3.0"
```

We can verify the rollout status:

```shell
kubectl rollout status deployment.apps/demo-app
```

The output is similar to this:

```text
Waiting for rollout to finish: 1 out of 3 new replicas has been updated...
```

Looking at the Pods that were created, we can see that the new Pods are stuck:

```shell
kubectl get pods
```

The output is similar to this:

```text
NAME                        READY   STATUS    RESTARTS   AGE
demo-app-1564180366-70iae   0/1     Running   0          3s
demo-app-1564180366-jbqqo   0/1     Running   0          3s
demo-app-1564180366-hysrc   0/1     Running   0          3s
```

Each new Pod shows 0/1 in the READY column. Its container is running but not ready, so the rollout cannot progress.
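Kubernetes eventually reports a stalled rollout like this as failed. The fragment below is a sketch that sets the Deployment's `progressDeadlineSeconds` field explicitly to its default of 600 seconds:

```yaml
# Fragment of demo-app.yml: how long the Deployment controller waits for
# the rollout to make progress before reporting it as failed (default 600).
spec:
  progressDeadlineSeconds: 600
```

If the rollout makes no progress within that time, the Deployment's `Progressing` condition is set to `False` with reason `ProgressDeadlineExceeded`, and `kubectl rollout status` exits with an error. Kubernetes does not roll back automatically, so the rollback itself is still up to us.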

In this case, we need to roll back the Deployment to a stable version. To do that, we first check the rollout history:

```shell
kubectl rollout history deployment.apps/demo-app
```

The output is similar to this:

```text
deployments "demo-app"
REVISION  CHANGE-CAUSE
1         "from file demo-app.yml"
2         "demo version changed from 1.0 to 2.0"
3         "demo version changed from 2.0 to 3.0"
```

The history lists each revision together with the change cause we added after updating the Deployment. In our case, revision 2 was the stable one.

We can roll back to a specific revision by passing its number to --to-revision:

```shell
kubectl rollout undo deployment.apps/demo-app --to-revision=2
```

The output is similar to this:

```text
deployment.apps/demo-app rolled back
```

To check that the rollback was successful and the Deployment is running as expected, run:

```shell
kubectl get deployment demo-app
```

The output is similar to this:

```text
NAME       READY   UP-TO-DATE   AVAILABLE   AGE
demo-app   3/3     3            3           12s
```

We can check the status of the Pods as well:

```shell
kubectl get pods
```

The output is similar to this:

```text
NAME                        READY   STATUS    RESTARTS   AGE
demo-app-1564180365-khku8   1/1     Running   0          14s
demo-app-1564180365-nacti   1/1     Running   0          14s
demo-app-1564180365-z9gth   1/1     Running   0          14s
```